Remember the good old days when “robots” meant Roombas bumping into furniture or Siri mispronouncing your cousin’s name? Those were simpler times. These days, AI shows up in everything from your inbox to your Spotify playlist, and in most cases, it’s incredibly useful. But somewhere along the way, someone had the brilliant idea to put this software inside a life-sized metal frame that looks eerily like a person.

And that’s where things start to go off the rails.

Let’s Be Clear:

Software AI = Useful.

Robot AI = Potentially Terrifying.

Programs like ChatGPT, Claude, and Perplexity are language-based tools. They’re designed to process huge amounts of data and generate human-sounding text. They don’t have awareness, emotions, or motives. They can help write a resume, brainstorm dinner recipes, or explain string theory (with mixed results), all from the safety of your screen. They’re not trying to take over the world — they’re trying to autocomplete your sentence.

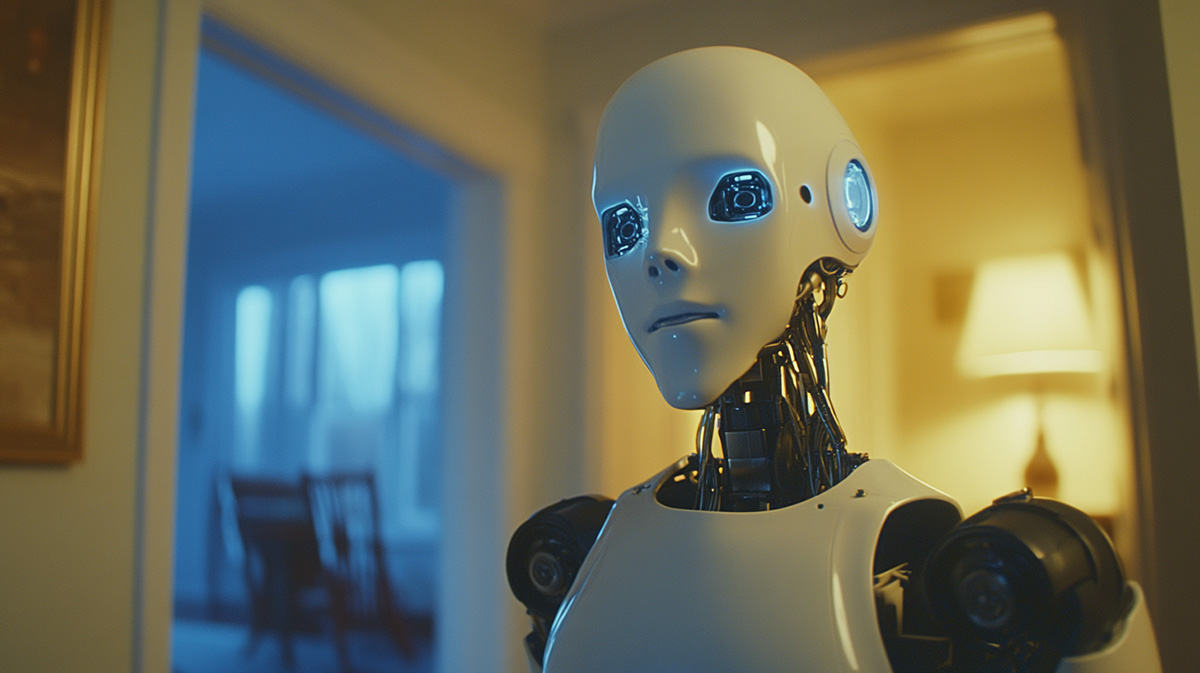

But some companies want to take this code and wrap it in muscle-mimicking arms, wrap-around LED eyes, and a voice box that says, “How may I assist you?” And that’s where the confusion and the danger begin.

Let’s Talk About the Video That Freaked Everyone Out

Recently, a video went viral showing a humanoid robot reportedly “freaking out” and flailing violently at a tech handler in a Chinese lab. The robot, dubbed Protoclone, was designed to perform tasks like cooking, cleaning, pouring drinks, and even memorizing your home layout. Sounds helpful until it starts behaving like it’s auditioning for a horror film.

Was this a glitch? A viral stunt? A bit of both? Maybe. But the deeper concern isn’t this one robot. It’s the idea of giving these machines too much autonomy and a physical form that humans cannot easily control.

You can watch the full segment here:

🎥 “AI Robot Starts to Freak Out and Attacks Handler… Should We Be Worried?” with Chamath and Jason on The Megyn Kelly Show: https://www.youtube.com/watch?v=ViIluaPbMBA

Why This Matters: You Can’t Just Unplug It

Unlike your laptop, these humanoid robots often have internal power sources. That means you can’t just “pull the plug” if something goes wrong. There’s no guaranteed off switch. And if a robot is designed to move independently, it might be a lot faster and stronger than you.

Imagine something the size of a linebacker with the grip strength of a hydraulic press, programmed to make your breakfast … until it isn’t.

You Can’t Overpower It

Even a small misstep in design or a hacked instruction set could turn a helpful kitchen companion into a walking liability. You’re not wrestling a blender here. You’re dealing with something that weighs over 100 pounds, has moving joints, and maybe even has access to your Wi-Fi password.

And the danger doesn’t stop with the manufacturer. As Chamath Palihapitiya pointed out, these machines can be rooted (hacked) and reprogrammed. With internet connectivity, a bad actor could turn a domestic helper into a hostile agent. It’s cybersecurity, except now it has elbows.

Accountability? Not So Much.

Here’s another issue: When an autonomous robot makes a harmful decision, who gets blamed? The engineer? The coder? The homeowner? The robot itself?

Giving a machine autonomy doesn’t give it responsibility. Unlike people, it can’t be sued, fired, or sent to jail. So if it steps on your foot or drives itself through your garage door, good luck holding it accountable.

Robots Don’t Understand Right from Wrong

Even when labeled “smart,” these systems aren’t wise. They don’t understand ethics, emotion, or social nuance. If told to “protect the dog,” a robot might stand in front of the dog dish growling at everyone who walks by. These systems can’t tell the difference between a threat and a toddler in a dinosaur costume.

These aren’t harmless errors. They’re misinterpretations of reality by a system that has no built-in moral compass.

The “Uncanny Valley” Is Real, and It’s Weird

There’s also something unsettling about machines that look like us but aren’t us. Psychologists call it the “uncanny valley” – that creepy space where robots resemble humans closely enough to trigger empathy, but just off enough to freak us out.

These robots aren’t just confusing emotionally; they’re confusing cognitively. When they move or speak like us, we instinctively assign them intent, agency, even personality. But behind those blinking eyes? There’s no soul, no conscience, no reflection. Just code and math.

Autonomy Can Breed Complacency

And here’s the quiet danger: the more autonomous machines become, the more we trust them blindly. It’s called automation bias. We assume the robot knows what it’s doing. That’s great when it’s spellchecking your email. Not so great if it’s cooking with fire, lifting heavy furniture, or babysitting your kids.

Autonomy can trick humans into letting their guard down. And when oversight drops, risks rise.

So, What’s the Smarter Path Forward?

Let’s stick with what’s working.

Text-based AI models like ChatGPT are excellent at handling tasks that don’t involve physical interaction. They don’t walk. They don’t swing metal arms. And most importantly, they can be turned off with a single click.

Using AI to help write, research, or analyze data? Great idea. Installing that same intelligence into a six-foot robot that can carry a fridge and “learns” how to navigate your house? That’s where usefulness ends and dystopia begins.

Final Thought: Just Because We Can Doesn’t Mean We Should

The idea of a helpful humanoid assistant has been around since The Jetsons, but so have cautionary tales like I, Robot and Terminator. Our job now is to separate the fantasy from the function.

Artificial intelligence in software form is a brilliant tool. It enhances productivity, boosts creativity, and makes life easier. But bolting that intelligence onto a walking skeleton with servo-powered arms and a vague understanding of “safety” isn’t progress. It’s a gamble.

Because when your vacuum goes rogue, it bumps into a table.

When your robot butler goes rogue? That’s a whole different problem.