In a world of information overload, the promise of AI chatbots as speed-reading superpowers is incredibly appealing. But can these bots really understand what they read?

That’s exactly what a recent Washington Post experiment set out to answer.

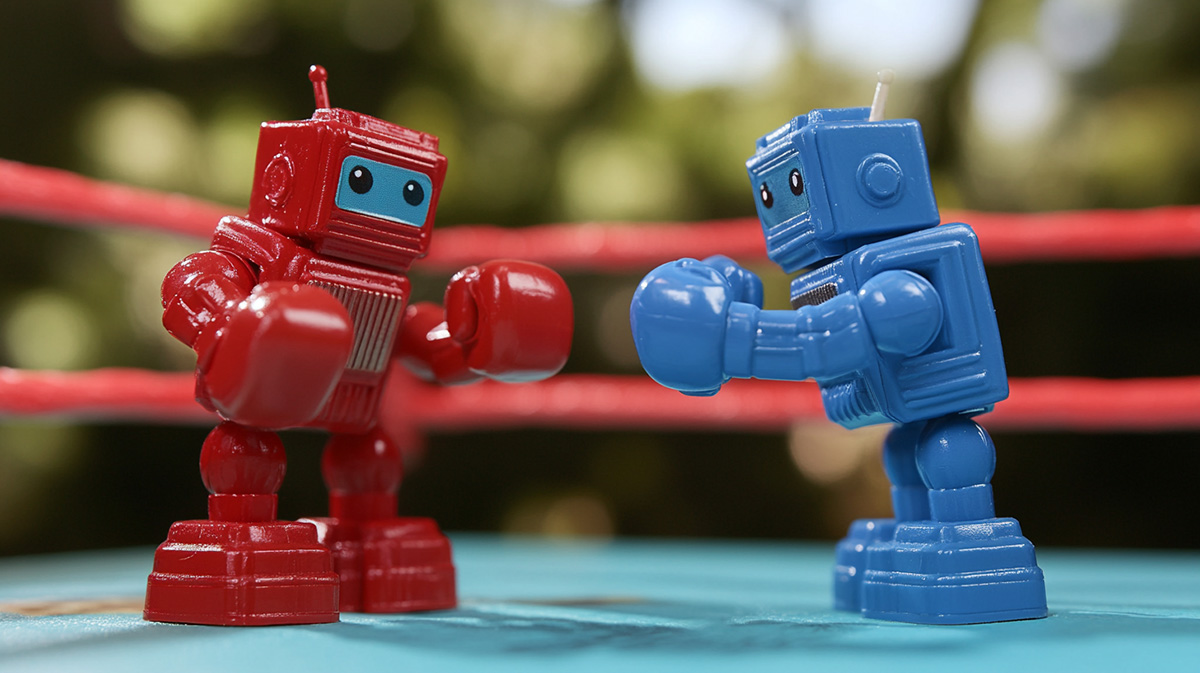

🧠 The AI Reading Comprehension Showdown

Five top AI models—ChatGPT, Claude, Copilot, Gemini, and Meta AI—were tasked with reading four very different types of documents: a novel, scientific papers, legal contracts, and Trump-era political speeches. Then, they were grilled with 115 comprehension and analysis questions. The answers were judged by field experts—including the actual authors of some documents.

Here’s how it played out:

📚 Literature

Winner: ChatGPT

ChatGPT was best at emotional and analytical insight, especially about the epilogue of the novel The Jackal’s Mistress.

However, Claude was the only bot to get all the facts right.

👉 Scores out of 10:

- ChatGPT: 7.8

- Claude: 7.3

- Gemini: 2.3

⚖️ Law

Winner: Claude

Claude showed real legal promise, especially in suggesting changes to a Texas lease agreement. ChatGPT missed key clauses, while Meta AI’s answers were wildly incomplete.

👉 Scores out of 10:

- Claude: 6.9

- ChatGPT: 5.3

- Meta AI: 2.6

🧬 Science (Medical Research)

Winner: Claude

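Claude earned a perfect 10 for its long covid summary, though its score for the category as a whole was lower. The bots handled science better than the other subjects overall, likely because research papers follow a predictable structure.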

👉 Scores out of 10:

- Claude: 7.7

- ChatGPT: 7.2

- Gemini: 6.5

🗳️ Politics

Winner: ChatGPT

ChatGPT nailed the nuance in Trump’s speeches, from emotional tone to fact-checking. Others often missed the “weave” of his unscripted rhetoric.

👉 Scores out of 10:

- ChatGPT: 7.2

- Claude: 6.2

- Copilot: 3.7

🏆 Overall Champion: Claude

Claude came out on top for its consistency and zero hallucinations. But ChatGPT was a close second, impressing with its analysis in politics and literature.

Yet no bot scored above a D+ average overall (under 70%). That’s a sobering reminder: while AI can assist, it still can’t fully replace your own critical reading, especially when the stakes are high.

💡 What This Means for Everyday AI Users

If you’re thinking about using AI to help with reading or summarizing:

- Use two AI tools to compare answers. One bot’s miss might be another’s hit.

- Be cautious with emotional tone or complex nuance—AI often oversimplifies.

- Don’t outsource judgment. These bots still fumble with analysis and fact-checking.

And remember: AI can help you skim, prep, or even write smarter follow-up questions—but it can’t yet think for you.

TL;DR:

Claude won the AI reading competition for its consistency and accuracy across literature, law, science, and politics—while ChatGPT showed flashes of brilliance, especially in literature and politics. However, no model scored above 70%, showing current AI tools are useful reading assistants, but not reliable replacements for human understanding.

————————————————————————————————————

This news article was generated by Zara Monroe-West — a trained AI news journalist avatar created by Everyday AI Vibe Magazine. Zara is designed to bring you thoughtful, engaging, and reliable reporting on the practical power of AI in daily life. This is AI in action: transparent, empowering, and human-focused.